# KUDO Performance Improvement with a Surprise

This started as a straightforward single-character change. Bump the KUDO Cassandra operator (opens new window)'s dependency on KUDO controller from v0.11.0 to v0.11.1 to pick up its recent performance (opens new window) improvements (opens new window).

What could possibly go wrong, right?

Well, Hyrum's Law (opens new window) made sure it's being remembered, and this seemingly simple bump required quite a bit of debugging and a workaround to succeed. This post describes this investigation, the cause of the issue and the workaround.

TL;DR: the performance improvements mentioned above caused the KUDO controller to always win a certain race condition. This turned a deficiency in stateful set controller (opens new window) into a deadlock.

Reading time: 00:05:00

# What is KUDO and KUDO-Cassandra?

Kubernetes Universal Declarative Operator (opens new window) is a toolkit that makes it easy to build Kubernetes Operators (opens new window), in most cases just using YAML.

One of the operators built for KUDO (opens new window) is one for running Apache Cassandra (opens new window). Its source code repository (opens new window) contains a few test suites (opens new window) which verify that certain features work as expected.

One of these suites, which verifies various TLS configuration options (opens new window), performs the following sequence of steps three times, in quick succession:

Install

cassandraOperator,OperatorVersionandInstanceresources. See KUDO docs (opens new window) if you'd like to know what these resources do.Wait for the

Instanceto become healthy.An important detail is that the instance in turn creates a stateful set, here named

cassandra-instance-node. This stateful set runs two pods, calledcassandra-instance-node-X, each of which requires an auto-provisioned persistent volume.Remove (opens new window) the

cassandraOperator,OperatorVersionandInstanceresources.Remove (opens new window) both

PersistentVolumeClaimresources, if they exist.

Each time the above sequence is performed, it is with a slightly different Cassandra configuration, but that is irrelevant to the subject of this post.

# The Failure

After bumping the version of KUDO controller used when running the tests, it turned out that this test always failed. The failure was a timeout waiting for install (step 1 above) to finish but never on the first execution of this sequence.

Initial inspection showed that the cassandra-instance-node-1 pod failed to schedule due to a missing persistent volume.

# The Investigation

# First Dead-End

I suspected that the reason I'm seeing the failure so reliably is that the timing changed significantly in the newer KUDO version.

I initially attributed this to a bug in the PVC protection controller (opens new window) that was present in k8s 1.15 that KUDO cassandra was using, because the last related Event that caught my eye mentioned this controller.

Unfortunately the Events logged by various controllers were not enough to confirm or disprove the theory that this was the root cause.

It was only after a more careful and organized debugging session that I understood what is going on, and realized that the PVC protection controller was working as intended in this case.

# Inspecting the Audit Log

The tool which did contain all the necessary information was the kube-apiserver audit log.

Luckily audit logging was already turned on by default by konvoy (opens new window) - the platform we use to deploy k8s - and the CI cleanup step already saved the log dumped by konvoy diagnose.

I only needed to improve my jq skills to be able to understand what it was recording.

First I aggreated the log from three master nodes into a single chronological one with:

cat */kube-apiserver-audit.log | \

jq -c '[.requestReceivedTimestamp,.]' | \

sort | \

jq -c '.[1]' > api-log.json

The first jq command above prepends the timestamp to each log record, and prints them in a compact form, suitable for piping into sort.

The second jq invocation simply strips that timeout and pretty-prints the output.

Next, since I knew the problem was somewhere around Pods, PersistentVolumes and PersistentVolumeClaims, I looked at a chronological overview of mutations of these kinds with something like the following - refining the query step by step as I focused more and more closely on the interesting part:

cat api-log.json | jq '

select(

(.objectRef.resource=="pods" and .objectRef.name=="cassandra-instance-node-1")

or

(.objectRef.resource=="persistentvolumeclaims" and .objectRef.name=="var-lib-cassandra-cassandra-instance-node-1")

or

(.objectRef.resource=="persistentvolumes" and .objectRef.name=="pvc-2aaa4cff-ec2b-47ef-9740-e36778265492")

or

(.objectRef.resource=="statefulsets" and .objectRef.name=="cassandra-instance-node")

or

(.objectRef.resource=="instances" and .objectRef.name=="cassandra-instance"))' | \

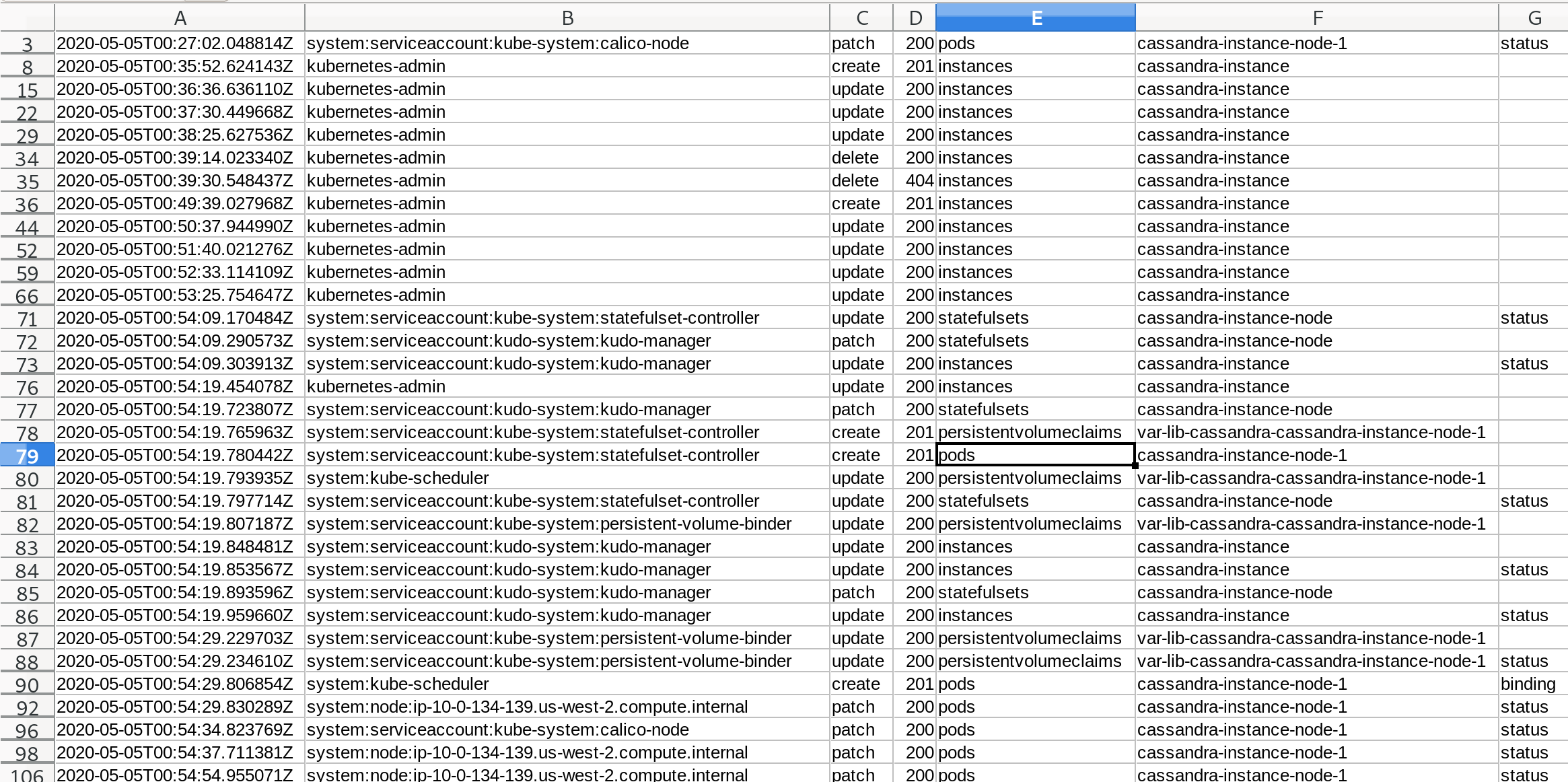

jq -r '[.requestReceivedTimestamp,.user.username,.verb,.responseStatus.code,.objectRef.resource,.objectRef.name,.objectRef.subresource?]|@csv' > report.csv

The first jq command applies various filters on the object reference, and the latter selects the interesting fields and prints them in CSV format.

I used LibreOffice CSV import for viewing and annotating the output in a clear tabular format.

Other useful filters:

by actor:

select(.user.username=="kubernetes-admin" or .user.username=="system:serviceaccount:kudo-system:kudo-manager")by time:

select(.requestReceivedTimestamp >= "2020-04-06T06:37:23" and .requestReceivedTimestamp <= "2020-04-06T06:37:51")

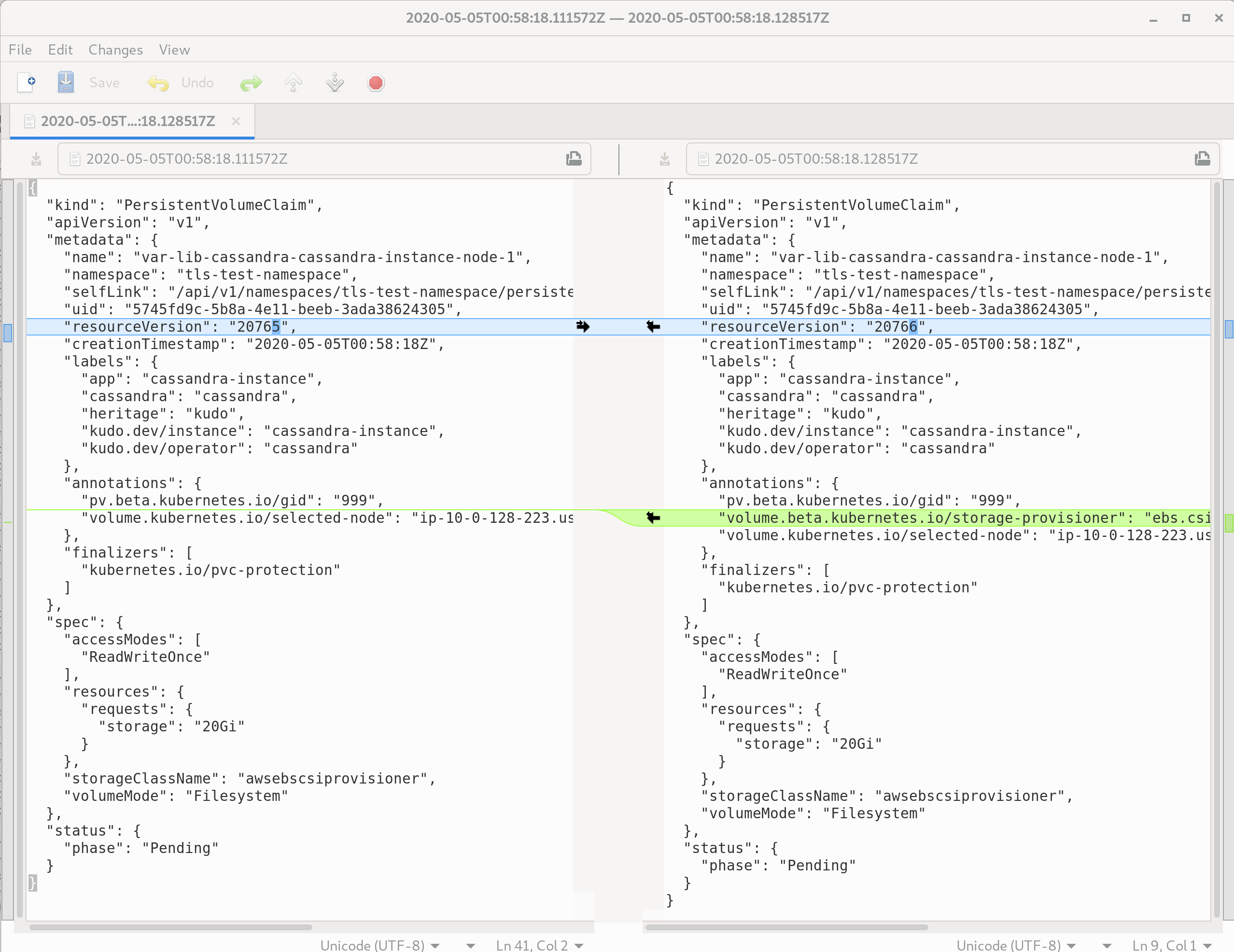

One can also change the latter jq invocation to pretty-print individual records into files by selecting their exact timestamp.

jq 'select(.requestReceivedTimestamp=="2020-04-06T06:37:23.416332Z") |

.responseObject' api-log.json

These can also be compared with a diff viewer such as meld (opens new window), to understand the intents of individual mutations:

# Root Cause

I eventually tracked down the deadlock to happen due to the following sequence of events:

- test code detects a healthy

Instanceand starts teardown: first, itDELETEs theInstance, the operation returns immediately and proceeds in the background, - 7ms later, test code

DELETEs thePVCs, these will also removed in the background when thekubernetes.io/pvc-protectionfinalizer is removed, - 20ms later, the next test starts and the test code

CREATEs a newInstance, - 34ms later, KUDO manager takes over the

StatefulSet(before it is garbage-collected as a result of the above deletion of theInstance) andPATCHes it to point at its new parent instance, with a newkudo.dev/last-plan-execution-uidannotation, - statefulset controller begins to roll out this change:

DELETEs the last pod in the set (cassandra-instance-node-1) - ~30s later the kubelet get rids of the pod,

- 25ms later, now that the pod is gone,

pvc-protection-controllerremoves its finalizer from the PVC, and only now is the PVC eligible for garbage-collection, - 5ms later statefulset controller creates a new pod, but does not do anything the PVC, since it still considers the PVC to be present,

- 14ms later

persistent-volume-bindermarks the PV as released and it is eventually GC-ed

The issue is that now that the pod is created, stateful set controller never considers even taking a look at the PVC, and the pod goes pending forever. This is described (among other things) in https://github.com/kubernetes/kubernetes/issues/74374 and there is even a potential fix (opens new window) waiting for almost a year already...

# The Trigger

I still did not understand exactly how the KUDO version bump tickled this issue.

But finding out was relatively easy now that I knew what tools to use.

I collected the audit log from a CI run on the tip the master branch, which still used KUDO v0.11.0.

It turns out that the sequence looks like this in that case:

- test code detects a healty

Instanceand starts teardown: first, itDELETEs theInstance(same as above), - 84ms later, test code

DELETEs thePVCs (same as above), - 140ms later, the next test starts and the test code

CREATEs a newInstance(same as above), - ~700ms later garbage collector

DELETEs theStatefulSetaltogether, - only 44 seconds later does KUDO manager come around to re-create the statefulset afresh

This time, since the StatefulSet is not modified in-place, the issue is not tickled.

# Conclusion

This issue illustrates how a seemingly innocent improvement in a patch release can have a surprising effect. It also helped me learn some skills useful when trying to understand the complex system that Kubernetes is.

The workaround I employed was to introduce (opens new window) and use (opens new window) a new UninstallWaitForDeletion function in the KUDO Go testing library (opens new window).

This function waits for the Instance resource to be deleted in the foreground.

This way by the time the Instance is created again, its underlying StatefulSet is guaranteed to be gone.